vSAN ESA on ESXi-over-PVE

A little bit of context

vSAN OSA

One of my biggest grievances as a customer regarding the VMware SDDC offering is vSAN OSA. When I first saw it in action, it looked like a “me too” response to Nutanix, which we successfully introduced around 2016 (if memory serves right), I believe as the first customer in Paraguay.

Also, coming from a background of Fibre Channel-based solutions like EMC’s VMAX and Hitachi’s VSP, it was a really hard sell feature-wise (no snapshots, no tiering, no replication, etc.), and going with 20-node clusters just because we needed capacity for big fat Oracle databases didn’t make sense (it’s not cost-effective).

Seen another way: compared to other solutions serving Xen or KVM, vSAN OSA on vSphere lacks flexibility:

- single pool per cluster

- single disk type

- cache drives as critical points of failure

When there was no ESXi in sight, we would just go with alternatives like Ceph or MooseFS (not GlusterFS, nobody likes you…).

On the bright side, it’s an interesting entry point to something better for folks who are behind technologically: think iSCSI over 1GbE, or CIFS/SMB3 served from your Windows Server file server :s

All in all, it makes sense if:

- you’re a VMware shop with very limited infra team(s)

- you want a quick way to introduce a virtualization platform

- there’s no centralized Storage + SAN in place to start with

- your storage needs are not disproportionate in comparison with compute

- you don’t need advanced storage services like taking disk snapshots of your 40TB live production Oracle database (10 × 4TB disks + ASM) to present them to a secondary reporting instance.

vSAN ESA

The first time I heard about vSAN ESA was at VMware Explore 2022. It’s not perfect, but it’s a step in the right direction.

Just to be clear, I’m quite happy with the evolution. :)

Make sure you watch the on-demand recording of the VMware Explore 2022 session “Get to Know the Next-Generation of vSAN Architecture” to understand all the goodies.

With that context in mind, I really recommend exploring the changes in the new architecture.

Going back to our nested Proxmox environment: if you’d like to get your feet wet, vSAN ESA can be deployed in our nested ESXi-on-KVM/Proxmox environment.

Of course:

- it won’t be supported, but will allow you to play with it

- actual performance tests will require a properly supported physical setup

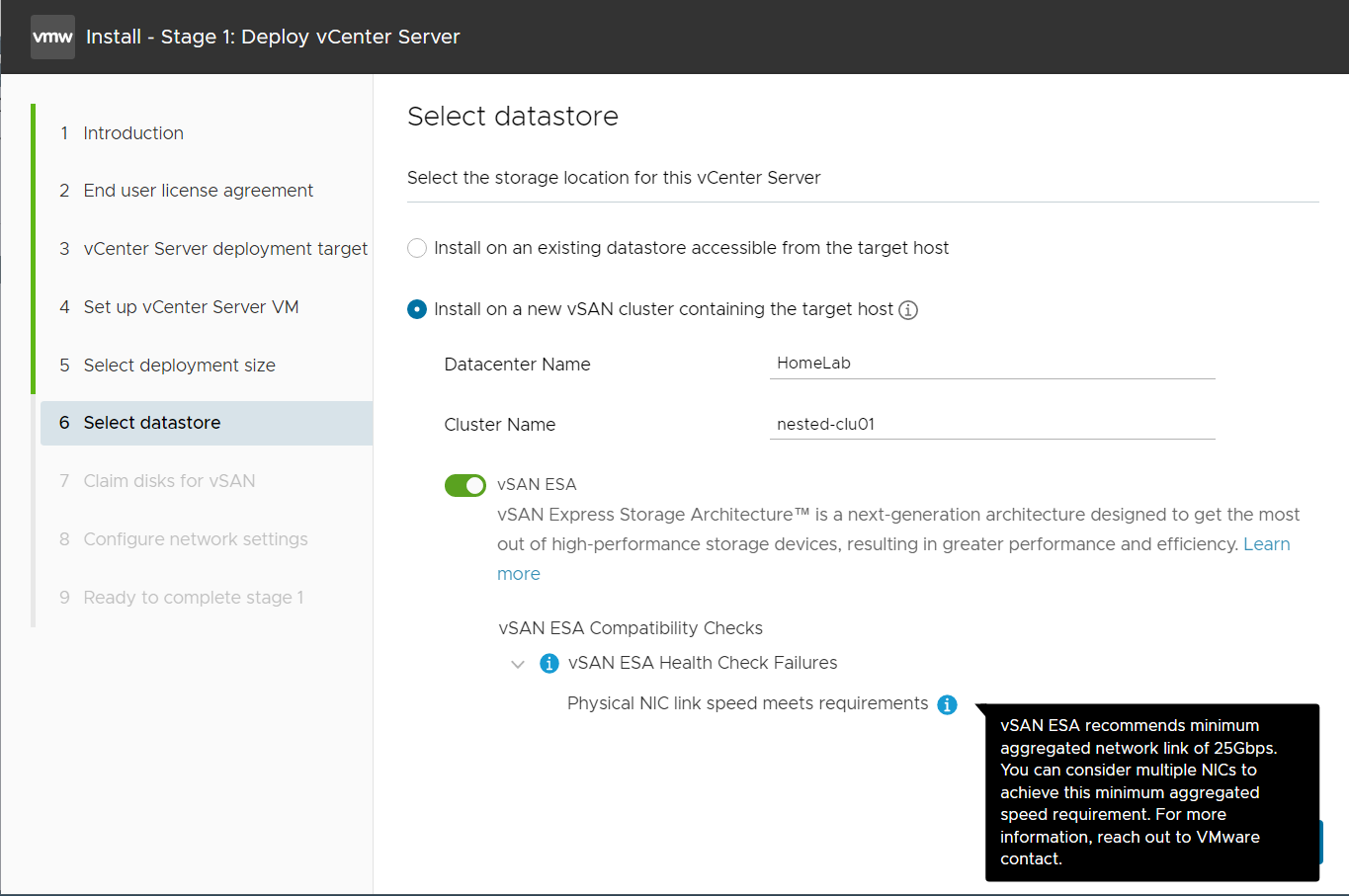

Warnings will show up, because we don’t meet the supported configuration requirements, notably:

- 25GbE interfaces

- NVMe controllers

- 512GB RAM per node

Disk controller

In an ideal world, we would use an NVMe controller, which QEMU supports but Proxmox doesn’t support yet. Given that, we’re going with the default SATA controller.
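For the curious: QEMU itself can expose an emulated NVMe controller, and Proxmox lets you hand raw arguments to QEMU through a VM’s `args` option. What follows is an untested assumption on my side, not part of the setup used here: a hypothetical `nvme_args_cmd` helper that only prints the `qm set` command (VM ID, image path and serial are placeholders) so you can review it before running it on the PVE host. The qcow2 image has to exist beforehand (e.g. `qemu-img create -f qcow2 <path> 100G`), Proxmox won’t manage a disk attached this way, and I haven’t checked whether nested ESXi actually picks the device up.

```shell
#!/usr/bin/env bash
# Hypothetical helper (untested assumption): prints, but does NOT run, a
# `qm set` command that attaches an emulated NVMe drive via raw QEMU args.
# Proxmox does not manage disks attached this way.
nvme_args_cmd() {
  local vmid="$1" image="$2" serial="$3"
  # -drive ...,if=none defines the backing image without attaching it;
  # -device nvme exposes it to the guest as an NVMe controller
  # (QEMU's nvme device requires a serial).
  printf "qm set %s --args '-drive file=%s,if=none,id=nvme0,format=qcow2 -device nvme,drive=nvme0,serial=%s'\n" \
    "$vmid" "$image" "$serial"
}

# Placeholder VM ID, image path and serial
nvme_args_cmd 101 /var/lib/vz/images/101/nvme0.qcow2 ESXI01NVME0
```

Only one drive is shown; additional NVMe drives would each need their own `id` and `serial`.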

Provisioning of the disks

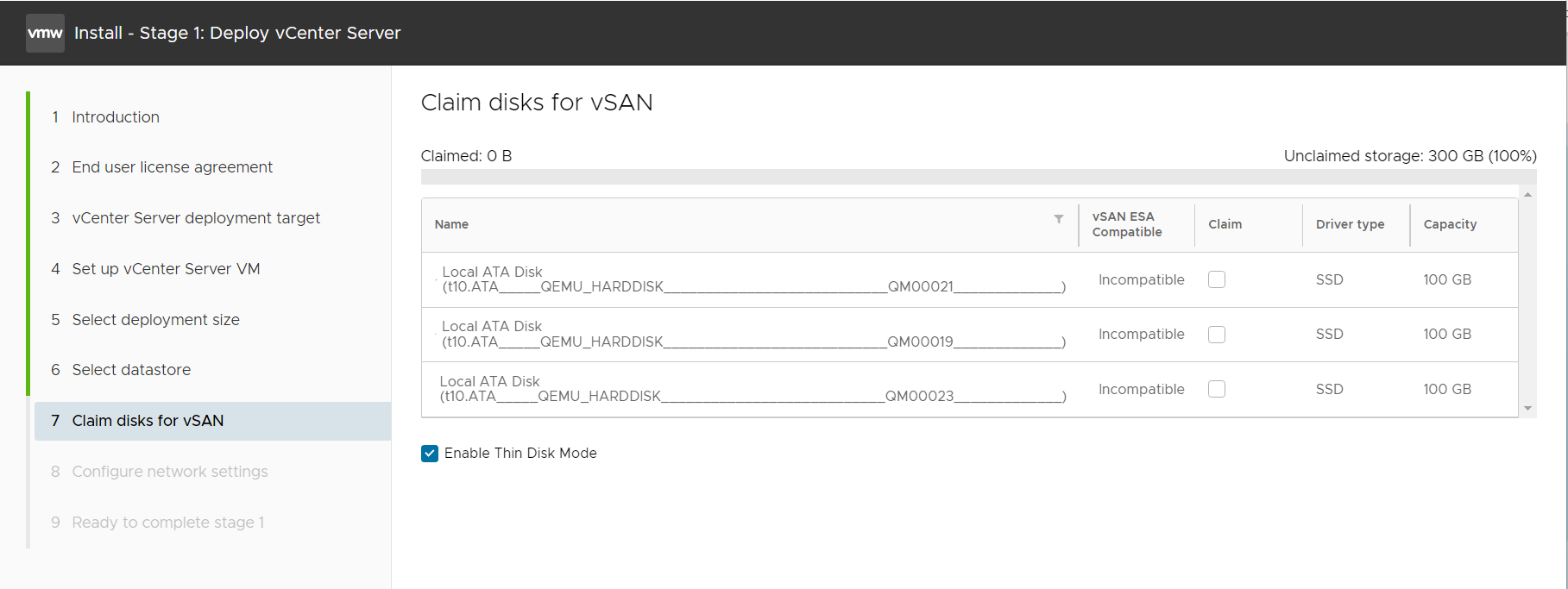

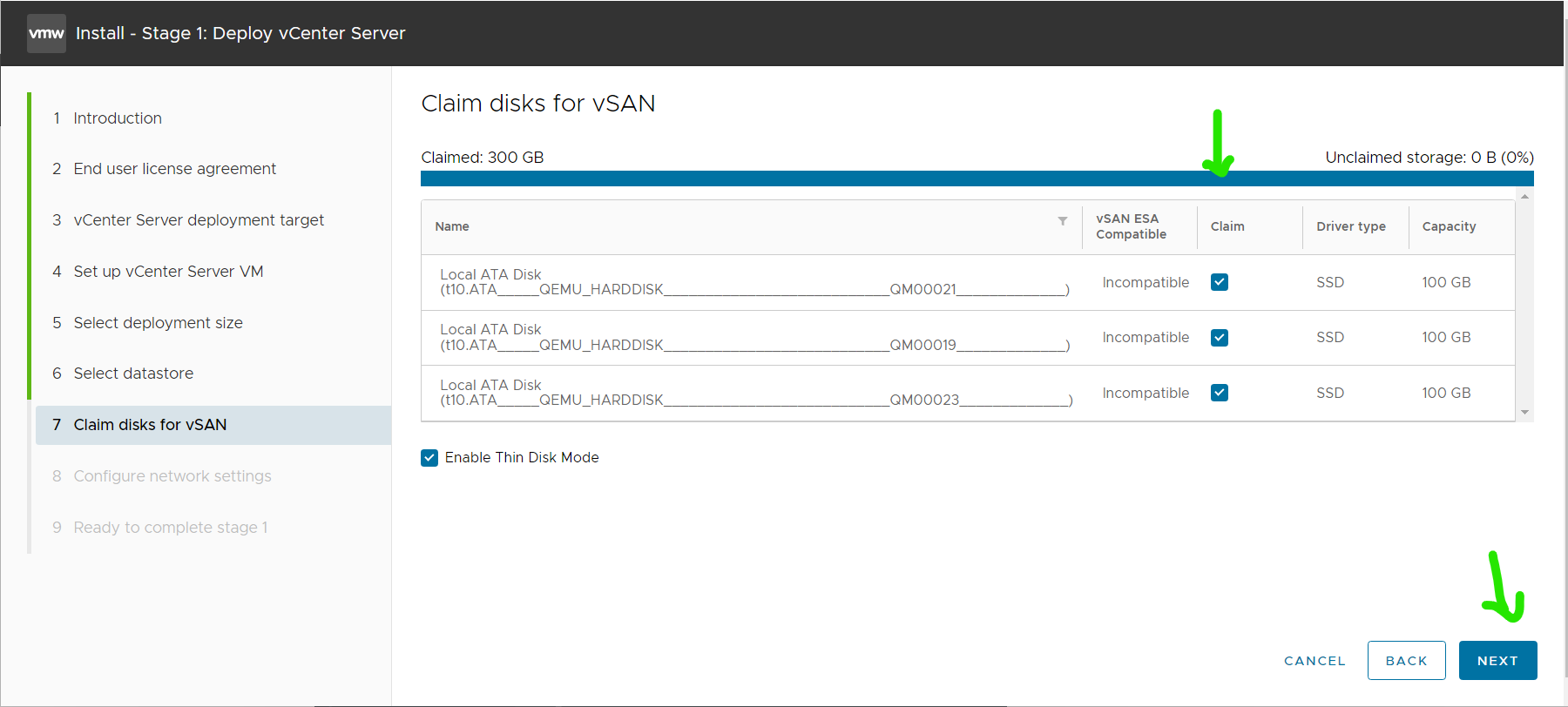

For this test, we’ll take 3 nested ESXi instances and add to each 3 x 100GB disks emulating SSD. You can go with more and/or bigger disks, the sky (your wallet?) is the limit.

```bash
STORAGE=dstore01

for VM in esxi01 esxi02 esxi03
do
  # Get the VM ID by exact name match (column 2 of `qm list`),
  # so that esxi01 doesn't also match something like esxi010
  VMID=$(qm list | awk -v name="${VM}" '$2 == name { print $1 }')

  # Add three new 100GB qcow2 disks on ${STORAGE}, emulating SSD with discard enabled
  qm set "${VMID}" --sata3 "${STORAGE}:100,format=qcow2,ssd=1,discard=on" \
    --sata4 "${STORAGE}:100,format=qcow2,ssd=1,discard=on" \
    --sata5 "${STORAGE}:100,format=qcow2,ssd=1,discard=on"
done
```

vSAN Setup
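Before setting up the cluster, it may be worth confirming that the three SSD-flagged disks actually landed on each VM. A minimal sketch, assuming the `sataN: <volume>,...,ssd=1` line format that `qm config` prints; `count_ssd_disks` is a hypothetical helper name and the sample volume names are illustrative:

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `qm config` output on stdin and counts the
# sata3..sata5 entries that carry the ssd=1 flag.
count_ssd_disks() {
  grep -E '^sata[3-5]: ' | grep -cE '(^|,)ssd=1(,|$)'
}

# Sample input shaped like `qm config` output (illustrative values)
sample='sata3: dstore01:101/vm-101-disk-1.qcow2,discard=on,size=100G,ssd=1
sata4: dstore01:101/vm-101-disk-2.qcow2,discard=on,size=100G,ssd=1
sata5: dstore01:101/vm-101-disk-3.qcow2,discard=on,size=100G,ssd=1'
printf '%s\n' "$sample" | count_ssd_disks
```

On the PVE host, `qm config <vmid> | count_ssd_disks` should print 3 for each of esxi01, esxi02 and esxi03.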

You can set up the vSAN cluster as you normally would, either during the vCenter deployment or once vCenter is up and running.

The first warning you’ll see is that the NICs are not supported (25GbE is required). You can keep moving forward either way.

If you do, the disks will be marked as incompatible. Just claim them manually.

Outcome

After a few minutes, you should have your working-but-unsupported ESXi-nested-on-PVE vSAN ESA cluster.

Have fun!